HYPV

A unified AI engine delivering hyperpersonalization, automated MVT and voice commerce to enterprise retail sites

Enhances large-scale, high-traffic retail stacks with deeply integrated targeting capabilities and CRO augmentations, and includes a safe, API-based upgrade path for legacy platforms

- runs alongside an existing site via API, or headless on top of it

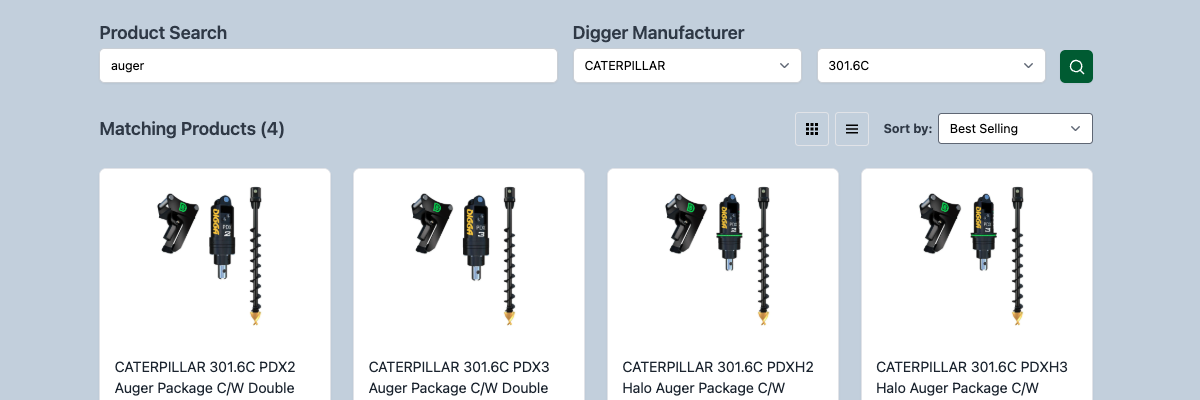

- combines deep product discovery and MVT features within a unified bare metal platform

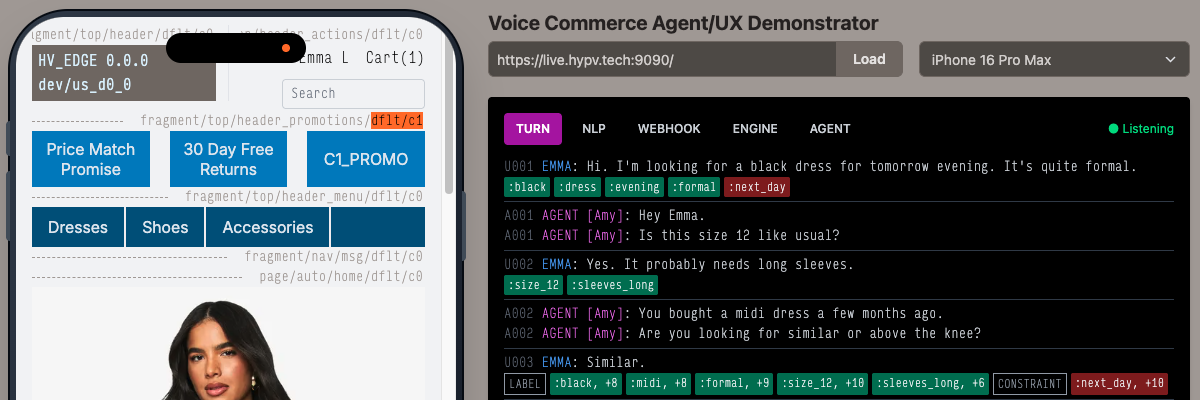

- adds voice commerce navigation that drives the shopping experience with conversational AI, either as a standalone agent or integrated as a specialized ecommerce targeting tool for your existing LLM

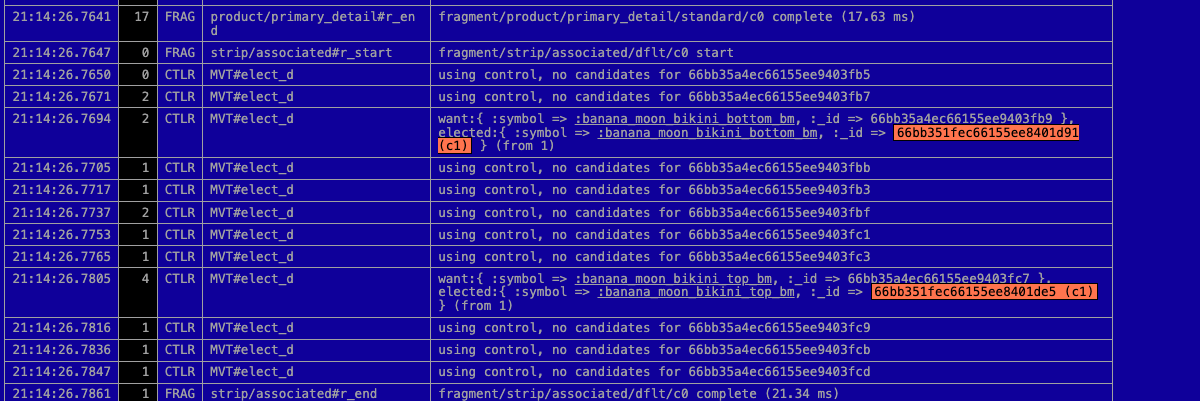

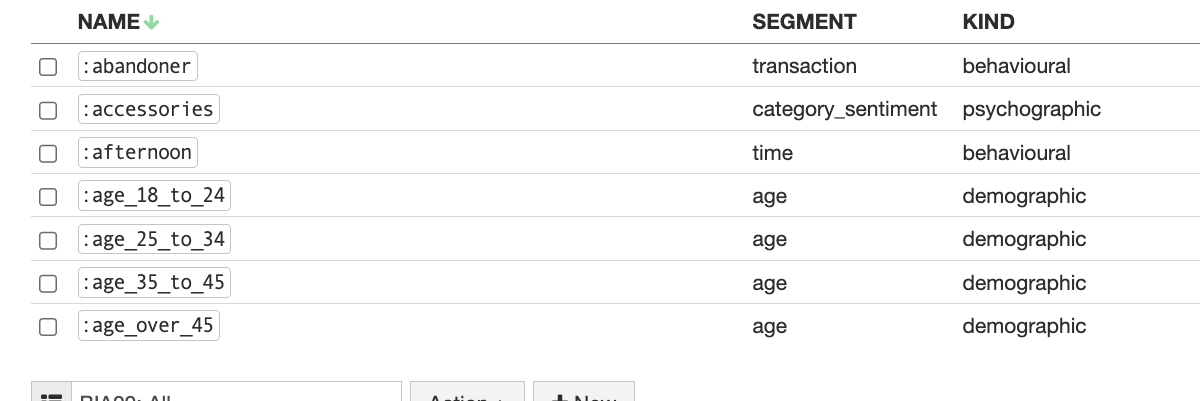

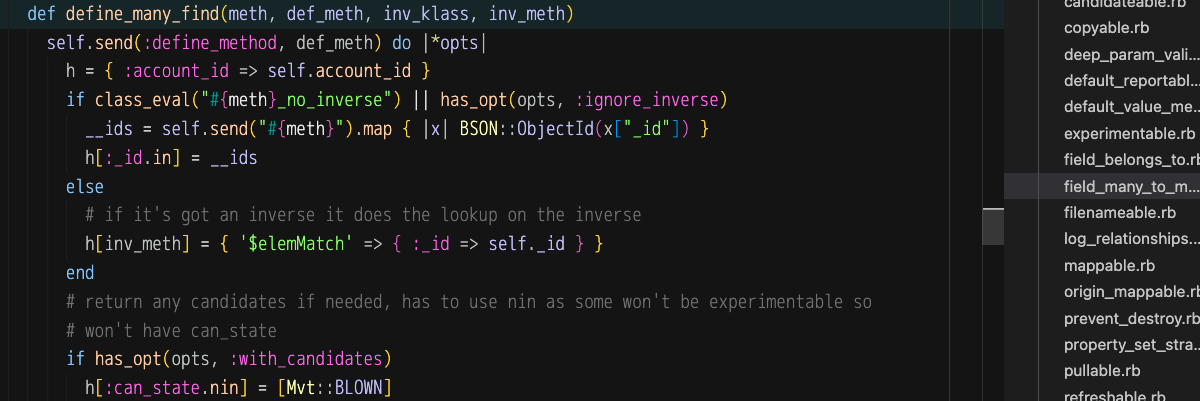

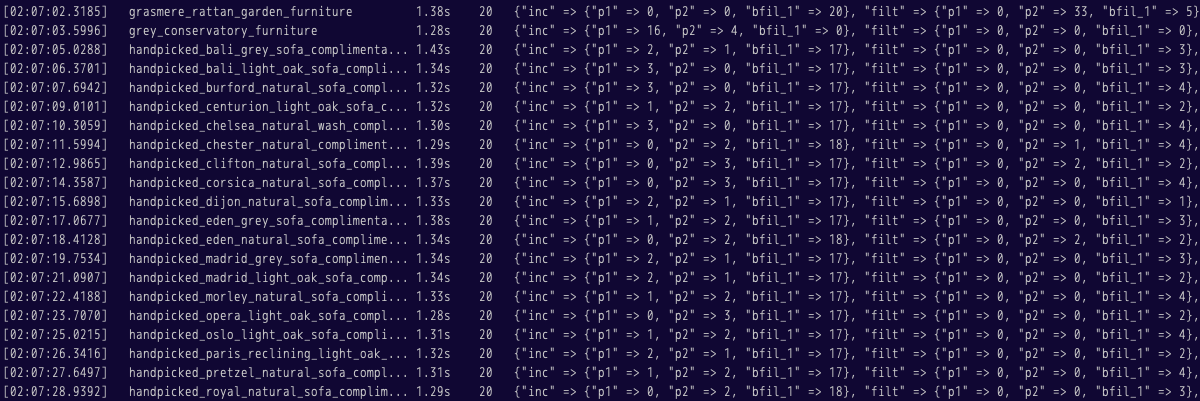

- novel architecture centered around a weighted and velocity-based labeling system

- able to hypervariate all aspects of discovery, code/algos and layout, enabling unlimited automated MVT targeting of the entire UX surface area

- entirely unique experiences can be created by cohort such as :high_ltv or :female_30_up without any restriction on what can be varied

- all targeting compute goes through the same pipeline, which can goal-seek for macro financial scenarios, such as increasing push on aged stock or prioritising margin over GMV

- generates a fresh denormalized database for each deployment with all variance baked in — which allows exotic testing scenarios such as an A/B of two completely different category taxonomies concurrently without breaking navigation

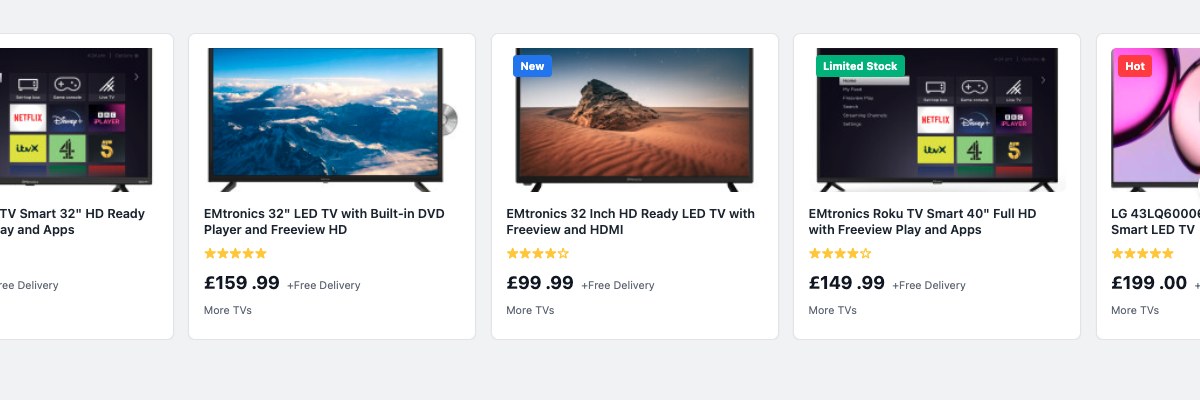
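The internals of the weighted and velocity-based labeling system are not described here, so the following is only an illustrative sketch of one way such labels could work. Everything in it is an assumption made for illustration: the `Label` fields, the `observe`, `score` and `pick_cohort` functions, the decay factor and the `bargain_hunter` cohort name are hypothetical and are not HYPV's API. The idea shown is that a label accumulates weight from behavior signals while a smoothed velocity tracks how quickly those signals are arriving, so that recent momentum can outrank older accumulated history when a cohort is chosen.

```python
from dataclasses import dataclass


@dataclass
class Label:
    """Hypothetical label: total accumulated signal plus its recent rate of arrival."""
    weight: float = 0.0
    velocity: float = 0.0


def observe(label: Label, signal: float, decay: float = 0.5) -> Label:
    """Fold one behavior signal into a label.

    weight is a running total of all signals seen so far;
    velocity is an exponentially smoothed average of recent signals.
    """
    return Label(
        weight=label.weight + signal,
        velocity=decay * label.velocity + (1 - decay) * signal,
    )


def score(label: Label, velocity_bias: float = 2.0) -> float:
    """Combine weight and velocity; velocity_bias is an assumed tuning knob."""
    return label.weight + velocity_bias * label.velocity


def pick_cohort(labels: dict[str, Label]) -> str:
    """Return the name of the highest-scoring label."""
    return max(labels, key=lambda name: score(labels[name]))


# Example: an older, heavier label versus a lighter one with recent momentum.
labels = {
    "bargain_hunter": Label(weight=4.0, velocity=0.0),
    "high_ltv": Label(),
}
for _ in range(3):
    labels["high_ltv"] = observe(labels["high_ltv"], 1.0)

cohort = pick_cohort(labels)
```

In this sketch `high_ltv` wins despite its lower total weight, because its recent signals give it a non-zero velocity.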

- uses wide data windows to pre-calc recsys caches alongside realtime refinement to render extremely precise hybrid-sets with manually promoted objects interlaced

- operates as light-touch discovery and augmentation for platforms like Shopify+, or extends to trickier scenarios such as upgrading legacy internal ecom apps, where HYPV can also polyfill wider missing functionality; compatible with any frontend tech, such as React or native mobile apps
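As a rough illustration of the hybrid-set idea mentioned above, the sketch below merges pre-calculated recommendation scores with realtime adjustments and interlaces manually promoted items at fixed slots. It is a minimal example under stated assumptions, not HYPV's implementation: the `hybrid_set` function, its parameters, the additive scoring and the "every third slot" rule are all hypothetical.

```python
def hybrid_set(cached, realtime, promoted, every=3, limit=10):
    """Build a result list from cached scores, realtime boosts and promoted items.

    cached:   dict of product id -> pre-calculated score (assumed shape)
    realtime: dict of product id -> realtime score adjustment (assumed shape)
    promoted: list of manually promoted product ids, in priority order
    every:    place a promoted item in every Nth slot while any remain
    limit:    maximum number of items to return
    """
    promoted_ids = set(promoted)
    # Rank non-promoted products by cached score plus any realtime adjustment.
    organic = sorted(
        (pid for pid in cached if pid not in promoted_ids),
        key=lambda pid: cached[pid] + realtime.get(pid, 0.0),
        reverse=True,
    )
    promos = list(promoted)
    result = []
    while len(result) < limit and (organic or promos):
        slot = len(result) + 1
        # Use a promoted item on its slot, or when organic items have run out.
        if promos and (slot % every == 0 or not organic):
            result.append(promos.pop(0))
        else:
            result.append(organic.pop(0))
    return result


cached = {"a": 0.9, "b": 0.8, "c": 0.7, "d": 0.6}
realtime = {"c": 0.3}  # a realtime signal lifts "c" above "a"
items = hybrid_set(cached, realtime, promoted=["p1"], every=3)
```

Here `items` is `["c", "a", "p1", "b", "d"]`: the realtime boost reorders the cached ranking, and the promoted item takes the third slot.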

Distinctly different from off-the-shelf SaaS add-ons (Segmentify, Ometria or Optimizely etc.) — HYPV consolidates all aspects of hyperpersonalization into a single engine which can be modified without limitation to optimize for the exact UX requirements of the specific audience and desired client outcome — no one-size-fits-all

All targeting and augmentations are tuned by HYPV engineers per client — not as a one-time setup, but as an ongoing partnership where we actively share ownership of continuous performance optimization

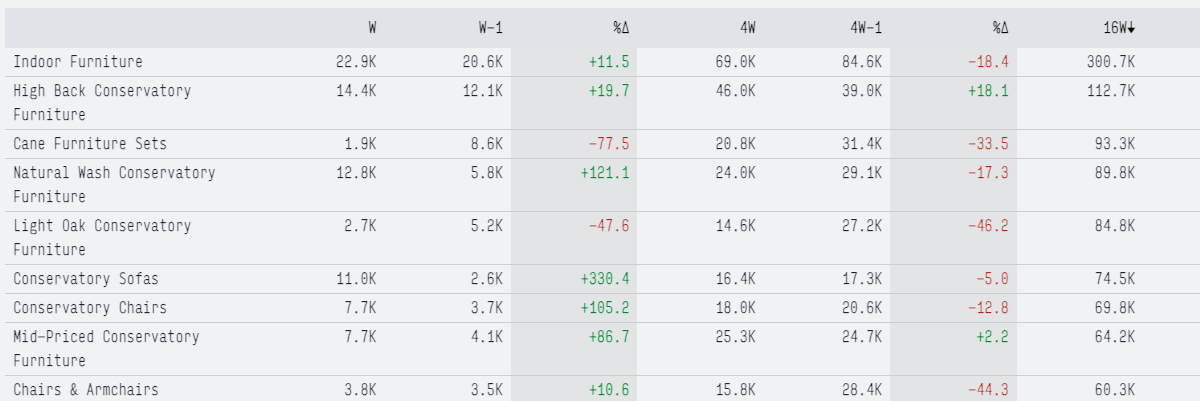

Specifically aimed at retailers that are currently limited in how much they can vary UX on behavior cues, that can benefit from unlimited MVTs running concurrently, and whose range can support highly granular segmentation strategies

HYPV targeting can be up and running as an augmentation API in weeks with minimal client resource or disruption, as most of the effort involved is vendor-side. Pricing is based on a resource plus license fee model, with license costs permanently waived for all trial clients

The optimization process does not stop after integration — contact@hypv.com

Important Information

1. The HYPV engine and tooling are intended for enterprise-scale retail situations with at least 5K products, 4M sessions/month and high potential for repeat visit frequency. A lot of compromises have been made to maximize targeting for those scenarios, in particular around signal collection volume, CMS ease-of-use and the proficiency level required of the teams involved.

2. Whilst HYPV can feed or receive rich behavior data to/from wider CX tools — the focus is limited to being best-in-class at optimizing UX on-page or in-app.

3. To be viable, a client's product range needs some obviously distinct audience divisions, as is common in the grocery and fashion sectors or on marketplaces. A specialist site needs a comparable split, for example a fishing retailer with clearly different sea and coarse audience behavior, plus high repeat traffic. Sectors with less pronounced segmentation, such as jewellery, are unlikely to benefit.

4. It is a core system component with SaaS elements, not just a simple add-on application. Our expert resources work in close partnership with client operational teams and share ownership of improving performance, ensuring it runs effectively and continually improves over time.